This might be the first in a series of posts exploring how historians can engage with AI tools. I have plenty of questions and only a few tentative answers. Please subscribe if you want to receive the next posts. If you're a historian dealing with similar questions, I'd like to hear about your experiences; you can reach me at lucaspoy@gmail.com.

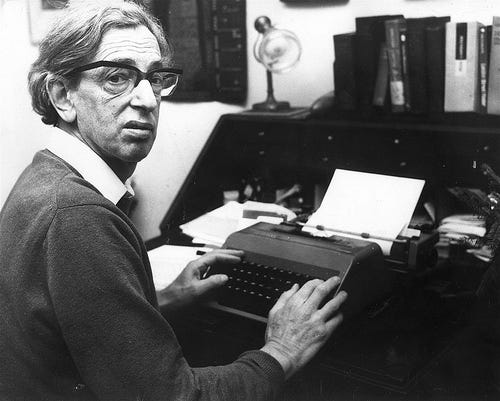

Photo: Eric Hobsbawm using technology to perform his job in January 1976.

In history departments, academic meetings, and articles on higher-education websites, we seem to be having the wrong conversation about generative AI. The tone of the discussion is purely defensive, sometimes even panicky. The conversation focuses on the “danger of cheating” and casts generative AI as something “the students” are using to avoid learning, to circumvent the hard work of thinking and writing; in a word, to undermine our efforts to educate them. In the worst cases, the very mention of AI tools lands like an existential threat and leads to total denial.

The problem gets worse when we talk about “AI” in completely abstract and often incorrect terms. We discuss it as if it’s a single, monolithic technology, frequently in ways that make it clear we’ve never actually used any of these tools. We conflate different models and capabilities, and we end up debating something we haven’t even bothered to try to understand. Many students, who do know a thing or two about these tools, must be having a good laugh seeing us panic. At least I would if I were young.

It often happens that we come across a student assignment with fabricated quotes or factual mistakes, clearly the result of a “hallucinated” AI text. The funny thing is that we seem to find some comfort in it. “Ah! See? It doesn’t work! We are going to be fine!” But here’s the thing: when students (or anyone else, for that matter) produce hallucinations, it’s not because they used AI; it’s because they used it poorly. This is like trying to eat soup with a fork and then declaring all cutlery useless. The problem is not the tool but which one we chose and how we used it.

In sum, I think we are tackling this the wrong way, for two reasons.

First, and most importantly, because students are not lazy, evil, or a conspiracy of cheaters. True, some students cheat, and have always done that, long before AI existed. But the overwhelming majority of students in our field (I can’t speak for other fields, but I assume it’s similar) are not here to “cheat their way through college”. They have a genuine interest in learning, they come to university because they like history, and they are curious about how to make the best of the possibilities available to them.

Second, because beyond everything else we should be curious. Critical, to be sure, but open to seeing what’s going on with new developments. Learn from the kids, why not. The defensive posture casts us as reactive guardians against a threat, not as proactive scholars seeking to understand a new and powerful tool.

***

Don’t get me wrong: the fear of hallucinations is real and justified. It’s alarming when we read papers with fabricated citations or made-up historical facts. But the root of the problem lies in a fundamental misunderstanding of what a Large Language Model (LLM) actually is. An LLM is not a database of facts. Trained on vast amounts of text, it learns the statistical relationships between words, becoming extraordinarily good at predicting which word should come next. If anything, it shows that most of the time we are quite predictable, and that when we say “Wake up and smell the…” the next word is quite likely to be “coffee”. An LLM is not designed to be accurate but to be plausible. It has no concept of truth or falsehood, only of linguistic patterns. When it invents a source, it’s just performing its function perfectly by generating text that looks like a real source.

I think we need to move beyond both the panic and the abstractions, and we need to do it quickly. As historians, we should be curious about these technologies, not just fearful of them. To even start a more logical conversation, we need to first understand what we’re actually dealing with. A good starting point is to have a very basic knowledge of the different types of tools often conflated behind the abstract concept of “AI”. What follows doesn’t go into any technical detail: it’s just a basic analysis of the readily available tools that anybody can access from their own browser as of July 2025, the time of writing.

***

1. Static LLMs (like ChatGPT in 2022)

I read once that you can think of this first type as a brilliant librarian in a library whose doors were sealed shut in 2022. This model (like the original ChatGPT that came out in late 2022 and started the current hype and panic) underwent a one-time “pre-training” on a finite dataset, freezing its knowledge at that moment. It works not by understanding facts, but by being a “next-token predictor”. It answers by calculating, one step at a time, the most statistically plausible next token (roughly, a word or a fragment of a word) given all the text that comes before it.

When you ask it a question, it is not querying a database. It is generating a linguistic performance that mimics the patterns in its data. When asked about events or sources after its knowledge cutoff, it will rarely say “I don't know”. Instead, its statistical model will generate text that looks like a plausible answer, often inventing names, dates, and citations with complete authority because the text “looks” correct from a pattern perspective.
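For readers who like to see the idea in miniature, here is a toy sketch of next-word prediction. It is not how ChatGPT is built: a real LLM is a neural network that takes the whole preceding text into account, while this example only counts which word follows which in a tiny corpus I made up. The names `CORPUS`, `train_bigrams`, and `predict_next` are my own, for illustration only.

```python
from collections import Counter, defaultdict

# A tiny, made-up "training corpus". A real model sees billions of words.
CORPUS = (
    "wake up and smell the coffee . "
    "wake up and smell the coffee . "
    "wake up and smell the roses . "
    "she drank the coffee ."
)


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


model = train_bigrams(CORPUS)
print(predict_next(model, "the"))  # prints: coffee
```

The model answers “coffee” because that is the most frequent continuation in its data, not because it knows anything about coffee. A model like this has no notion of a true or false answer, only of a more or less frequent one.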

2. Web-Connected LLMs (like modern ChatGPT, Gemini, Claude, etc.)

The first major upgrade, and the way most common tools work today, overcomes the “sealed library” problem by integrating a live search function. When you ask a question, the model’s internal tools formulate a search query and send it to a conventional search engine. The model then receives the top results, reads them in real time, and synthesizes that information into a conversational answer. The risk of hallucinations is somewhat reduced (although not eliminated, especially in the free versions), but the quality of the answer depends entirely on the quality and bias of the top search results. It is excellent for finding information on recent events, but it can just as easily synthesize and present misinformation if that’s what a search algorithm surfaces.

3. Grounded LLMs with RAG (like NotebookLM)

This is fundamentally different. You feed the system a specific, curated set of your own documents, like your digitized primary sources, your PDFs of academic articles, your research notes, but also videos or just website links. The tool uses a framework called Retrieval-Augmented Generation (RAG) to restrict the answer to a specific set of documents that you provide.

When you upload your sources, the system indexes them, breaking them into chunks and converting them into a searchable “knowledge library”. When you ask a question, the tool first retrieves the most relevant passages from your documents only. It then “augments” your prompt, which means something like telling the model: “Answer this question using only the following excerpts”. This forces the model to base its response on the provided material, allowing for verifiable citations. Hallucinations are dramatically reduced, but it cannot discover new information outside of the sources you have given it. It’s like having a conversation with your own archive rather than with the entire internet.
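The retrieve-then-augment steps just described can be sketched in a few lines. This is a minimal illustration under heavy assumptions, not NotebookLM’s actual implementation: the three “sources” are invented, the file names are hypothetical, and I rank passages by simple word overlap, whereas real systems typically compare numerical representations of meaning (embeddings). The final call to a language model is left out; the sketch stops at the augmented prompt.

```python
import re

# Invented excerpts standing in for your own uploaded sources.
SOURCES = {
    "letter_1848.txt": "The strike at the textile mill began in March after wages were cut.",
    "minutes_1849.txt": "The council voted to fund a new railway line to the port.",
    "diary_1850.txt": "Bread prices rose sharply and the harvest failed that autumn.",
}


def tokenize(text):
    """Lowercase the text and return its set of distinct words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, sources, top_k=1):
    """Return up to top_k (name, text) pairs sharing the most words with the question."""
    question_words = tokenize(question)
    scored = []
    for name, text in sources.items():
        overlap = len(question_words & tokenize(text))
        scored.append((overlap, name, text))
    # Highest overlap first; the sort is stable, so ties keep their original order.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(name, text) for overlap, name, text in scored[:top_k] if overlap > 0]


def build_prompt(question, passages):
    """'Augment' the question with the retrieved excerpts and an instruction."""
    excerpts = "\n".join(f"[{name}] {text}" for name, text in passages)
    return (
        "Answer this question using only the following excerpts, "
        "and cite the file name for each claim.\n\n"
        f"Excerpts:\n{excerpts}\n\nQuestion: {question}"
    )


question = "When did the strike at the mill begin?"
passages = retrieve(question, SOURCES)
print(build_prompt(question, passages))
```

Only the letter about the strike reaches the prompt; the railway minutes and the diary are never shown to the model. That is both why citations become checkable and why the tool cannot tell you anything your sources do not contain.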

Of all the readily accessible tools that are available for free, with an intuitive interface that requires almost no previous knowledge, I think NotebookLM is the most powerful one for us as historians. It can create very decent summaries of primary and secondary sources uploaded by the user, produce literature reviews of up to 50 sources at the same time, write exam questions with rubrics, and do many more things.

4. Deep Research Systems

This is the latest evolution, acting as an “agentic” or autonomous system (like Gemini or ChatGPT with “Deep Research” options, usually only available in the premium versions). Given a prompt, this system independently creates a multi-step research plan. It then executes dozens of parallel web searches, reads the initial findings, identifies gaps or new lines of inquiry, and autonomously generates new, more specific queries to dig deeper. Finally, it synthesizes all of this iterative research into a single, structured report, which usually takes around 10 minutes to finish. Its primary risk is opaque methodology. The final output can be quite good, but the process is a black box. You don’t see the dozens of micro-decisions the agent made about which sources to pursue, which to trust, and which to ignore, so the historian has to verify the result even more rigorously.
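To make the search-read-follow-up loop a little more concrete, here is a toy version. Everything in it is an assumption for illustration: the “web” is a small made-up dictionary, the follow-up leads are hard-coded rather than chosen by a model, and the searches run one after another instead of in parallel. The function name `deep_research` is mine, not any product’s API.

```python
from collections import deque

# A made-up "web": each query returns a finding plus follow-up leads.
FAKE_WEB = {
    "1848 mill strike": ("The strike followed a wage cut.",
                         ["wage cut causes", "strike leaders"]),
    "wage cut causes": ("Cotton prices had collapsed.",
                        ["cotton price collapse"]),
    "strike leaders": ("Two weavers organised the walkout.", []),
    "cotton price collapse": ("Imports fell after a poor harvest abroad.", []),
}


def deep_research(start_query, web, max_searches=3):
    """Follow leads from one query to the next until the search budget runs out."""
    queue = deque([start_query])
    seen = set()
    findings, log = [], []
    while queue and len(seen) < max_searches:
        query = queue.popleft()
        if query in seen:
            continue
        seen.add(query)
        if query not in web:
            log.append(f"no result: {query}")
            continue
        finding, leads = web[query]
        findings.append(finding)
        log.append(f"searched: {query}")
        queue.extend(leads)  # new lines of inquiry go to the back of the queue
    # Record the leads that were never followed because the budget ran out.
    log.extend(f"skipped (budget): {q}" for q in queue if q not in seen)
    return findings, log


findings, log = deep_research("1848 mill strike", FAKE_WEB)
print("\n".join(log))
```

With a budget of three searches, the lead about the cotton price collapse is never followed, so the final report would be silent on it. The `log` list is the kind of decision trail that, in my experience of these tools, the reader of the finished report has to reconstruct by checking the sources.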

***

My point is that understanding these (very basic) distinctions changes how we think about AI’s role in historical work. A static LLM might be useful for brainstorming or explaining concepts, but it’s dangerous for factual research. A grounded system like NotebookLM can help you synthesize your own sources but won’t discover new ones. A deep research system might uncover ideas, texts, or authors you hadn’t considered, but you’ll need to verify everything it finds.

In future posts I’d like to address in more detail what this all means for us as teachers. I think the “solution” is not as far from us as we think. It is mostly about going back to the core of our task and restructuring the constructive alignment that should exist between the goals of our teaching (in terms of contents and skills) and the assessment forms we devise to test if those goals have been achieved. Spoiler: I do think that many take-home writing assignments might have to disappear, and that more oral assessments should come to the fore.

In any case, to start a more meaningful conversation we need to understand these tools if we’re going to make informed decisions about them. Most of our students are already using them, and pretending they don’t exist won’t make these tools go away.

Thank you for the very insightful reflections, Lucas. Obrigada! As a disabled (chronically ill) academic, I do use AI tools to help me with work. I still know little about the different AI tools but am very interested in getting to know more. Technology is important for people with disabilities, who are often overlooked in academia.